Hey all,

I finally got around to writing about our automated byte signature generator. This is going to be a bird’s-eye view, so if you want the details you’ll have to read Christian’s thesis (in German) or wait for our academic paper (in English) to be accepted somewhere.

First, some background: One of the things we’re always working on at zynamics is VxClass, our automated malware classification system. The underlying core that drives VxClass is the BinDiff 3 engine (about which I have written elsewhere). An important insight about BinDiff’s algorithms is the following:

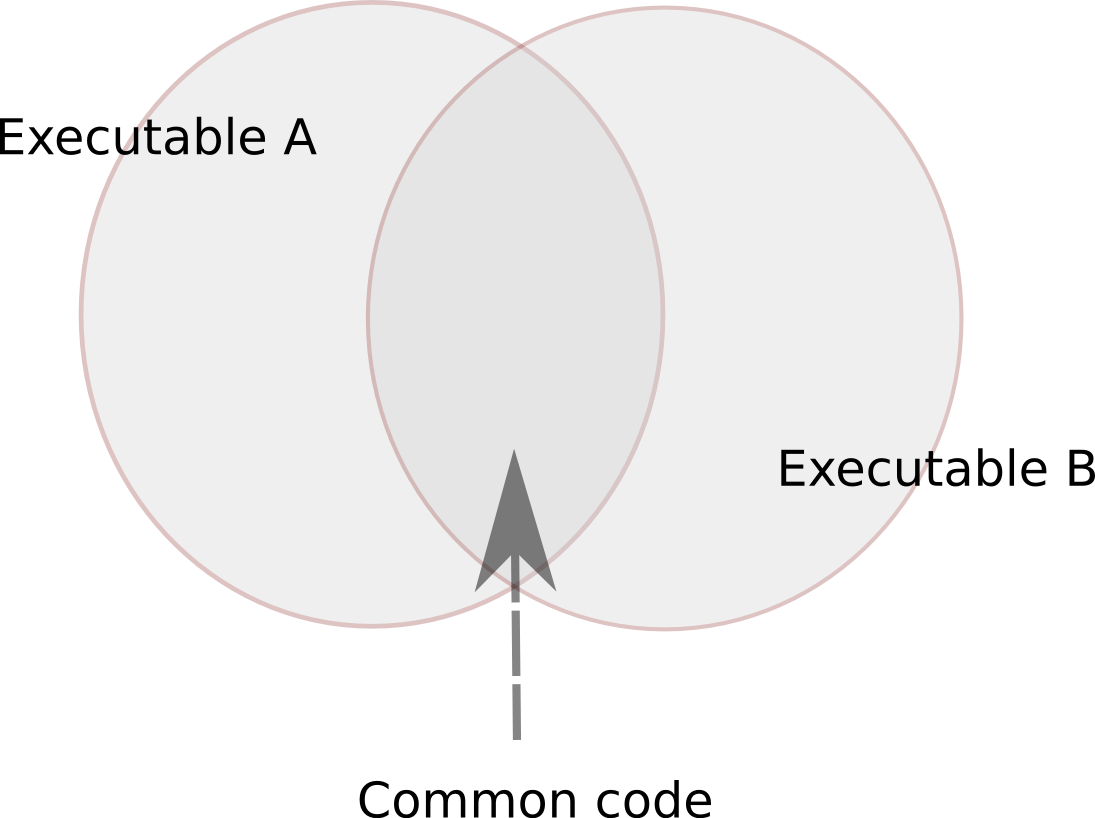

BinDiff works like an intersection operator between executables.

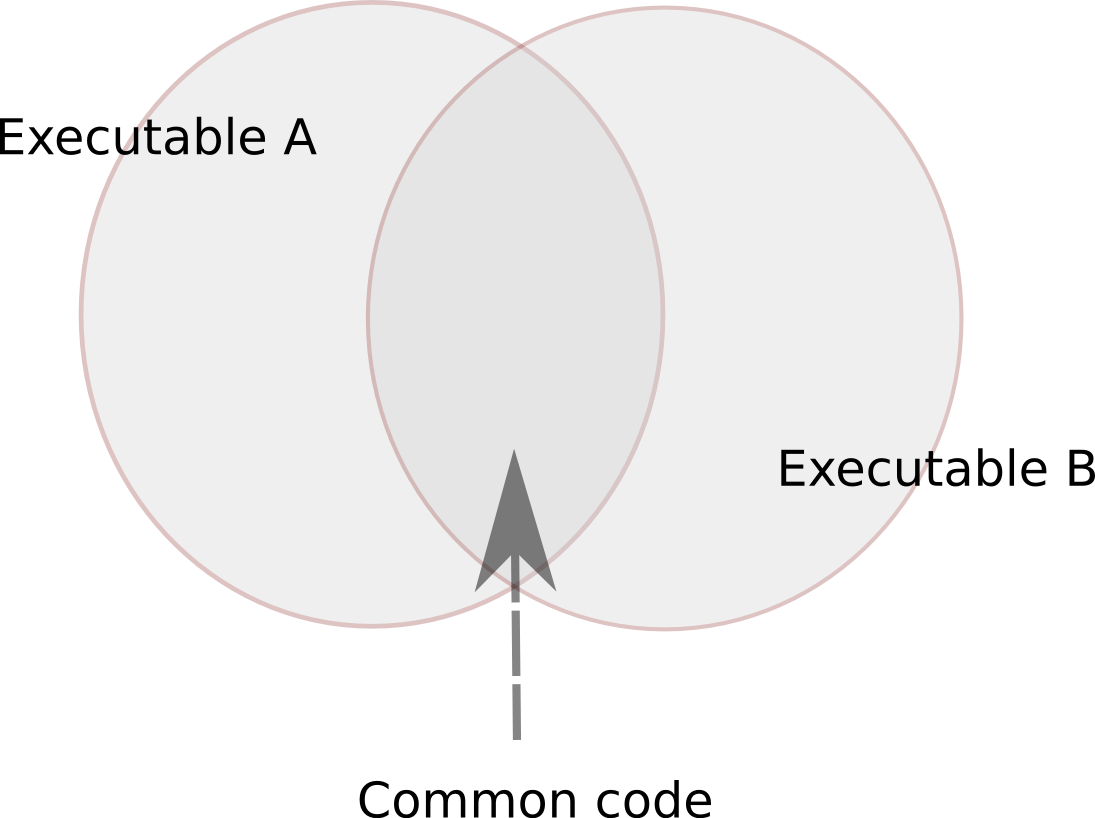

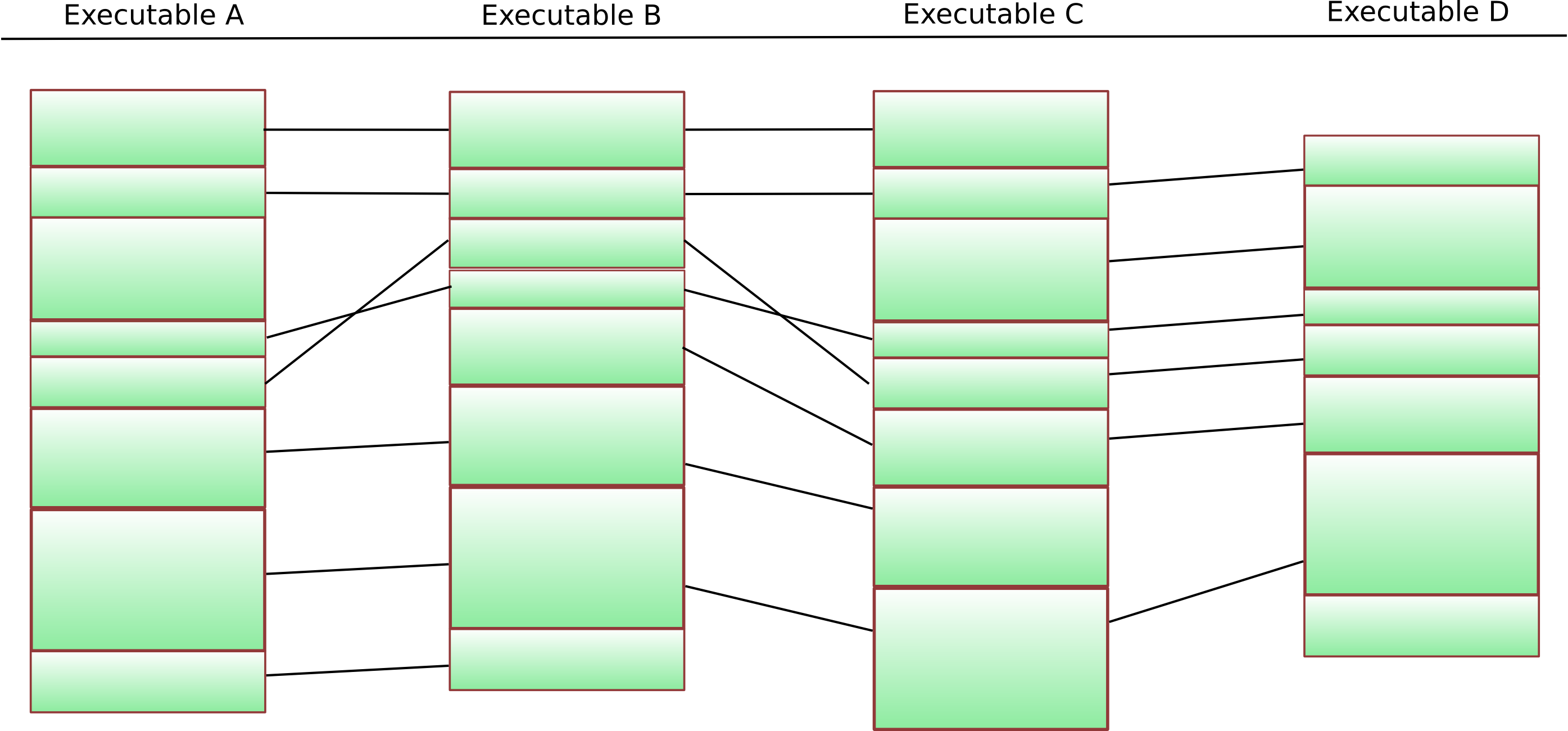

This is easily visualized as a Venn diagram: Running BinDiff on two executables identifies functions that are common to both executables and provides a mapping that makes it easy to find the corresponding function in executable B given any function in A.

Two executables and the common code

This intersection operator also forms the basis of the similarity score that VxClass calculates when classifying executables. This means that the malware families that are identified using VxClass share code. (Footnote: It might seem obvious that malware families should share code, but there is a lot of confusion around the term “malware family”, and before any confusion arises, it’s better to be explicit)

So when we identify a number of executables to be part of a cluster, what we mean is that code is shared pairwise, i.e. for each executable in the cluster, there is another executable in the cluster with which it shares a sizeable proportion of its code. Furthermore, the BinDiff algorithms provide us with a way of calculating the intersection of two executables. This means that we can also calculate the intersection of all executables in the cluster, and thus identify the stable core that is present in all samples of a malware family.

What do we want from a “traditional” antivirus signature? We would like it to match on all known samples of a family, and we would like it to not match on anything else. The signature should be easy to scan for, ideally just a bunch of bytes with wildcards.
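To make “a bunch of bytes with wildcards” concrete, here is a minimal Python sketch of a scanner for such a signature. It assumes a simplified notation (hex byte runs separated by `*`, where `*` stands for any number of bytes); the function names are made up for illustration and this is not how any particular AV engine implements its matching.

```python
import re

def compile_signature(sig: str) -> "re.Pattern[bytes]":
    """Turn 'hex*hex*...' into a bytes regex; '*' matches any number of bytes."""
    runs = [re.escape(bytes.fromhex(part)) for part in sig.split("*")]
    # DOTALL so that the wildcard also spans 0x0a bytes.
    return re.compile(b".*?".join(runs), re.DOTALL)

def matches(sig: str, data: bytes) -> bool:
    """True if the byte runs of the signature occur in order somewhere in data."""
    return compile_signature(sig).search(data) is not None

if __name__ == "__main__":
    sample = bytes.fromhex("90558bec0102035dc3")
    print(matches("558bec*5dc3", sample))
```

A real engine would scan many signatures at once with something faster than one regex per signature, but the matching semantics are the same idea.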

The bytes in the stable core form great candidates for the byte signature. The strategy to extract byte sequences then goes like this:

- Extract all functions in the stable core that occur in the same order in all executables in question

- From this, extract all basic blocks in the stable core that occur in the same order in all executables in question

- From this, extract (per basic block) the sequences of bytes that occur in the same order in all executables in question

- If any gaps occur, fill them with wildcards

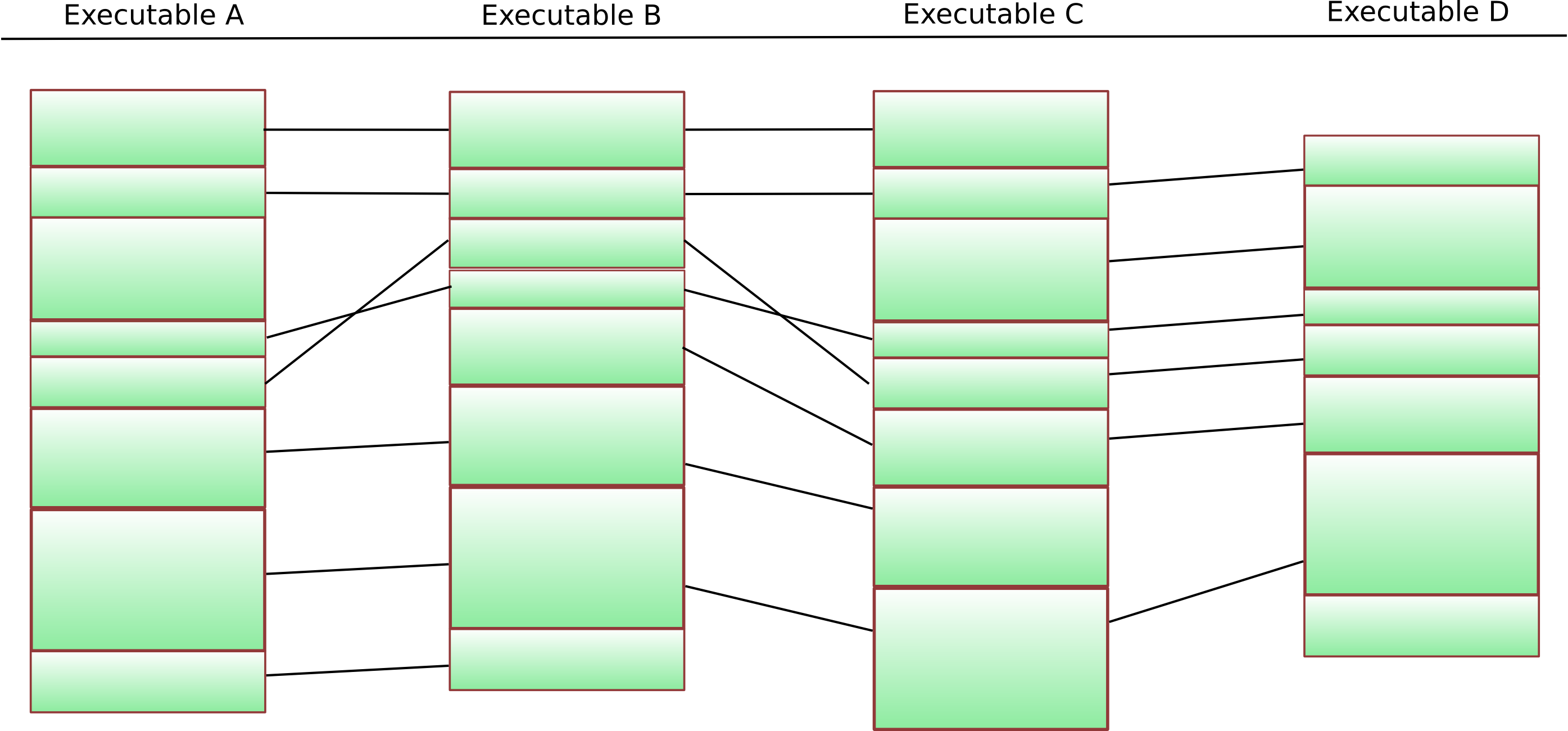

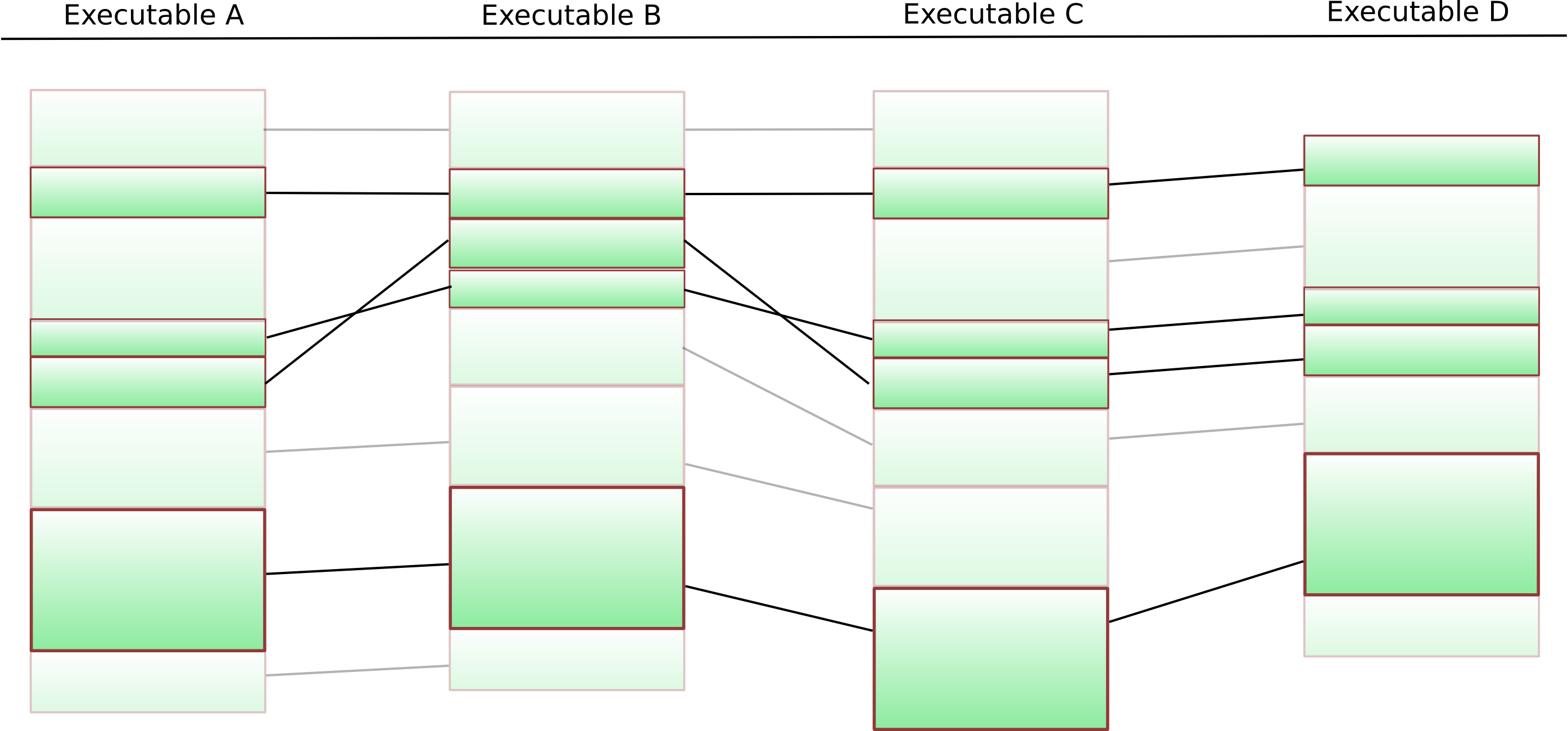

Sounds easy, eh? 🙂 Let’s understand the first step in the process better by looking at a diagram:

Four executables and the results of BinDiff between them

The columns show four different executables – each green block represents one particular function. The black lines are “matches” that the BinDiff algorithms have identified. The first step is now to identify functions that are present in each of the executables. This is a fairly easy thing to do, and if we remove the functions that do not occur everywhere from our diagram, we get something like this:

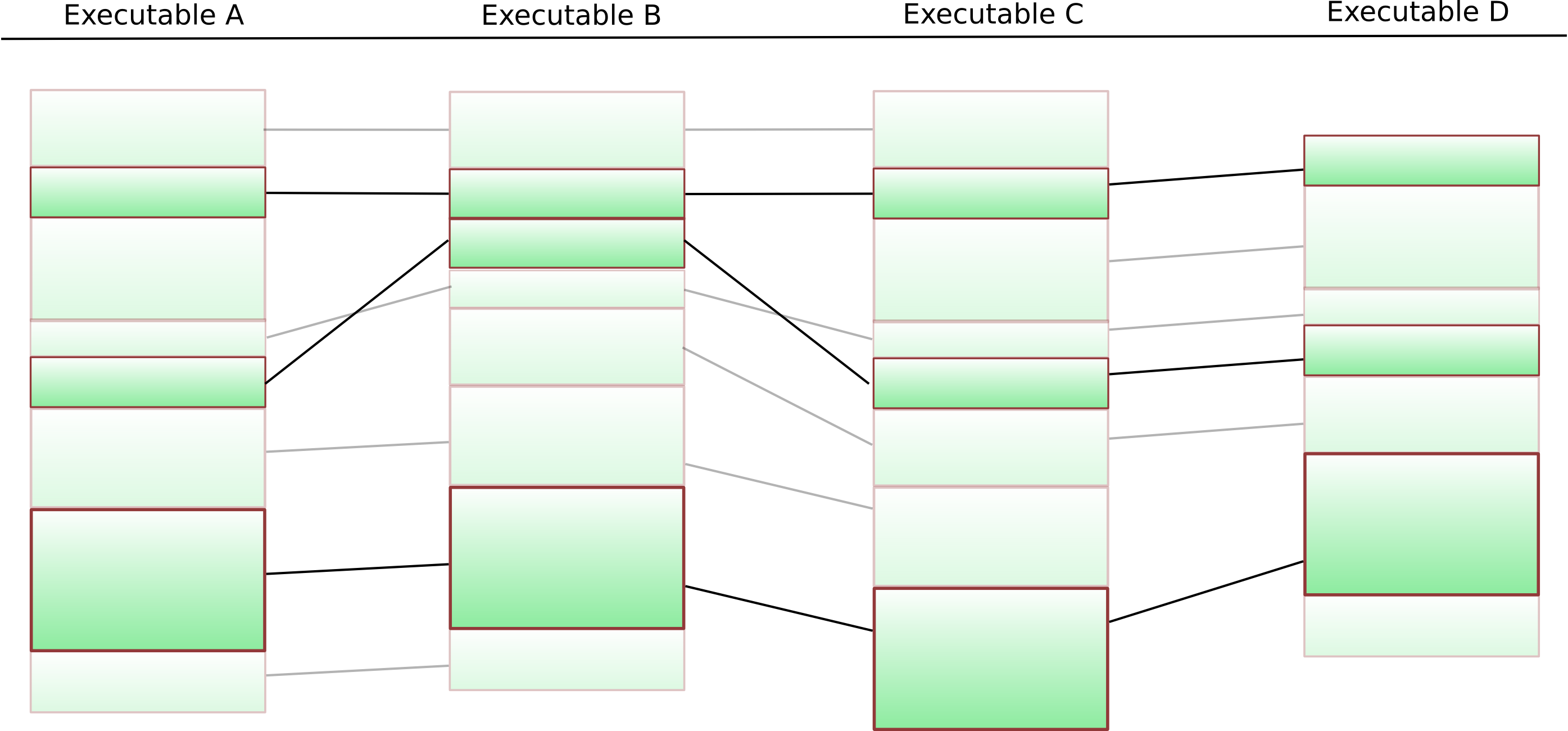

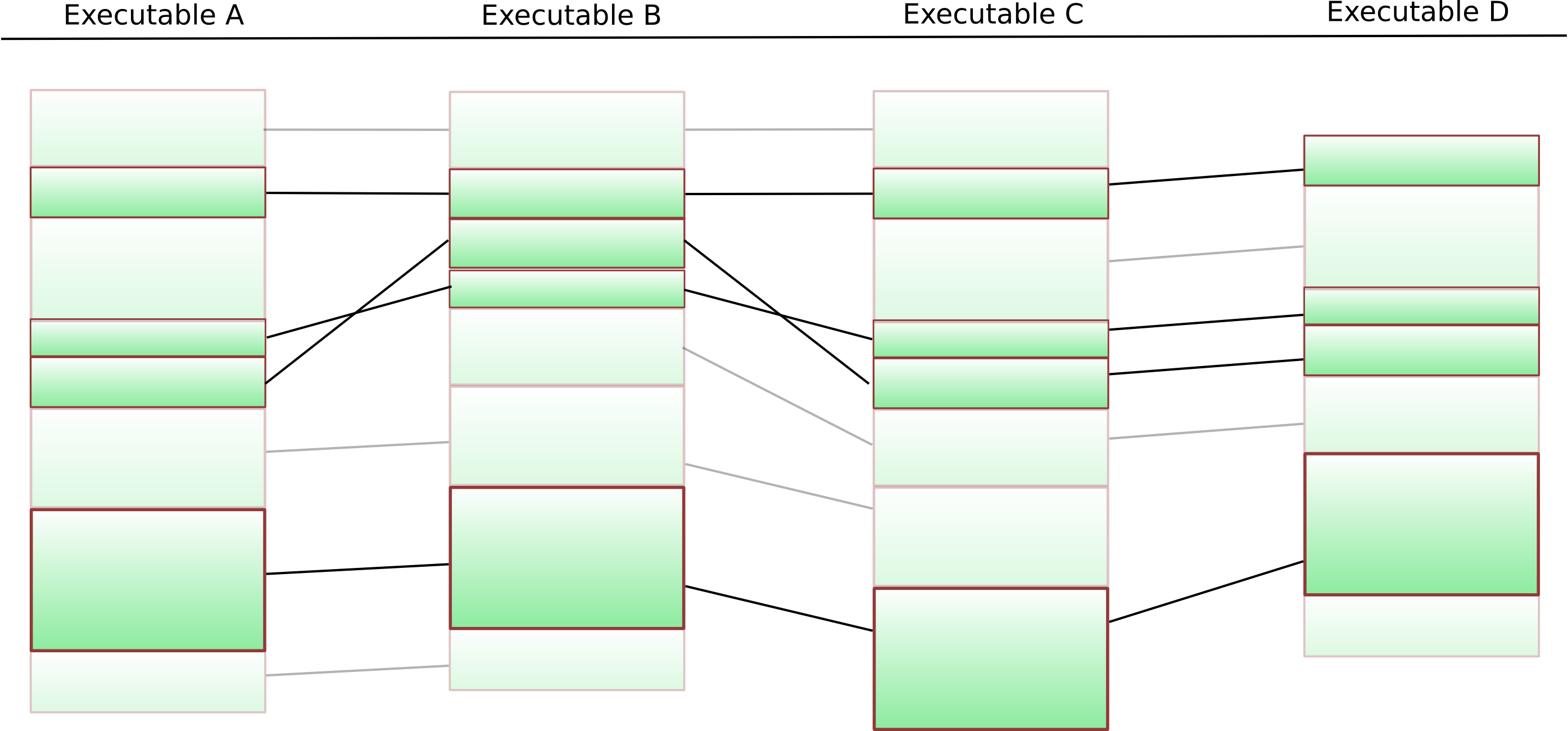

Only functions that appear everywhere left
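The filtering step can be sketched in a few lines of Python. This sketch assumes the BinDiff results are available as a chain of dictionaries, where the i-th dictionary maps a function in executable i to its match in executable i+1; that representation and the name `functions_present_everywhere` are assumptions made for illustration, not the actual VxClass data structures.

```python
def functions_present_everywhere(chain):
    """Follow matches along a chain of pairwise diffs.

    chain[i] maps a function (e.g. its address) in executable i to the
    matched function in executable i+1. Returns one list per surviving
    function, holding its counterpart in every executable.
    """
    if not chain:
        return []
    # Every matched function of the first executable starts a track.
    tracks = [[f] for f in chain[0]]
    for matches in chain:
        extended = []
        for track in tracks:
            nxt = matches.get(track[-1])
            if nxt is not None:  # drop the track if the match chain breaks
                extended.append(track + [nxt])
        tracks = extended
    return tracks

if __name__ == "__main__":
    a_vs_b = {"a1": "b1", "a2": "b2", "a3": "b3"}
    b_vs_c = {"b1": "c1", "b3": "c2"}
    print(functions_present_everywhere([a_vs_b, b_vs_c]))
```

Here `a2` has a match in the second executable but not in the third, so it is removed, just like the functions that disappear between the two diagrams.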

Now, we of course still have to remove functions that do not appear in the same order in all executables. The best way to do this is using a k-LCS algorithm.

What is a k-LCS algorithm? LCS stands for longest common subsequence – given two sequences over the same alphabet, an LCS algorithm attempts to find the longest sequence that is a subsequence of both. LCS calculations form the backbone of the UNIX diff command line tool. A natural extension of this problem is finding the longest common subsequence of many sequences (not just two) – and this extension is called k-LCS.
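For readers who have not seen it before, the two-sequence case is the textbook dynamic programming algorithm. The following Python sketch is that standard algorithm and nothing specific to our implementation:

```python
def lcs(a, b):
    """Return one longest common subsequence of sequences a and b as a list."""
    n, m = len(a), len(b)
    # dp[i][j] = length of an LCS of a[i:] and b[j:]
    dp = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n - 1, -1, -1):
        for j in range(m - 1, -1, -1):
            if a[i] == b[j]:
                dp[i][j] = dp[i + 1][j + 1] + 1
            else:
                dp[i][j] = max(dp[i + 1][j], dp[i][j + 1])
    # Walk the table from the top-left corner to recover one solution.
    out, i, j = [], 0, 0
    while i < n and j < m:
        if a[i] == b[j]:
            out.append(a[i])
            i += 1
            j += 1
        elif dp[i + 1][j] >= dp[i][j + 1]:
            i += 1
        else:
            j += 1
    return out

if __name__ == "__main__":
    print("".join(lcs("ABCBDAB", "BDCABA")))
```

This takes time and memory proportional to the product of the two lengths. Extending the table to k sequences makes it k-dimensional, which is why the general problem gets expensive so quickly.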

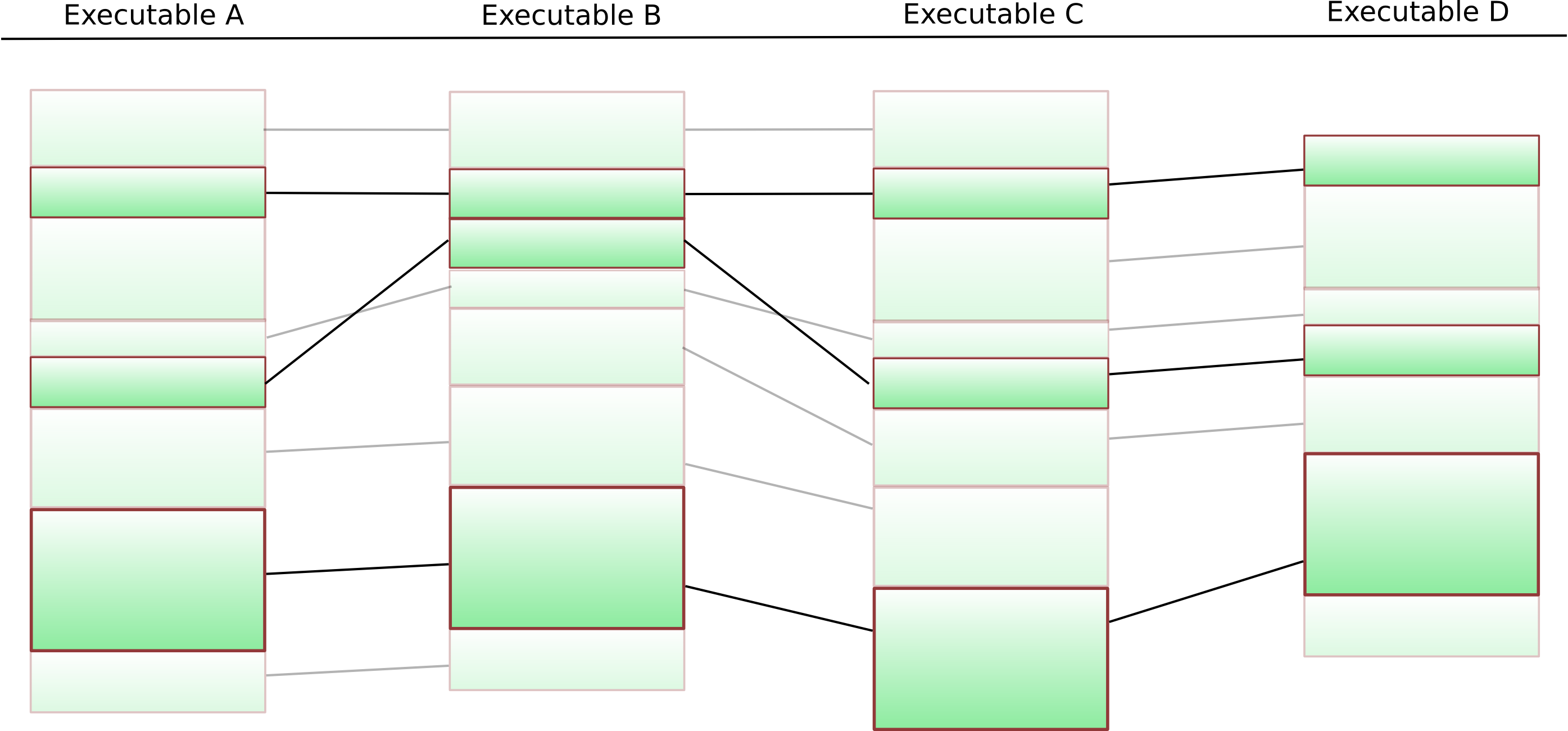

This suffers from the slight drawback that k-LCS on arbitrary sequences is NP-hard. In our particular case, though, the situation is much easier: after the previous step every executable contains exactly the same set of matched functions, so we can simply put an arbitrary ordering on the functions, and our “k-LCS on sequences” gets reduced to “k-LCS on sequences that are permutations of each other”, in which case the entire thing can be solved efficiently (check Christian’s diploma thesis for details). The final result looks like this:

Functions that occur in the same order everywhere
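One well-known way to see why the permutation case is easy: say that x comes before y if x precedes y in every sequence. A common subsequence is then exactly a chain in this ordering, and the longest chain can be found with a simple quadratic dynamic program. The Python sketch below illustrates that idea; it is not necessarily the algorithm from Christian’s thesis, and the function name is made up.

```python
def klcs_permutations(seqs):
    """Longest common subsequence of sequences that are permutations
    of the same set of distinct elements."""
    if not seqs or not seqs[0]:
        return []
    # Position of every element in every sequence.
    pos = [{x: i for i, x in enumerate(s)} for s in seqs]
    order = seqs[0]
    best = {}  # element -> (length of best chain ending here, predecessor)
    for i, x in enumerate(order):
        length, prev = 1, None
        for y in order[:i]:
            # y may precede x in the result only if it does so everywhere.
            if all(p[y] < p[x] for p in pos) and best[y][0] + 1 > length:
                length, prev = best[y][0] + 1, y
        best[x] = (length, prev)
    # Backtrack from the element that ends the longest chain.
    x = max(best, key=lambda k: best[k][0])
    out = []
    while x is not None:
        out.append(x)
        x = best[x][1]
    return out[::-1]

if __name__ == "__main__":
    print(klcs_permutations([[1, 2, 3, 4, 5], [2, 1, 3, 5, 4], [1, 3, 2, 4, 5]]))
```

With n functions and k executables this runs in O(n² · k) time, which is perfectly fine for the function counts of typical malware samples.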

Given the remaining functions, the entire process can be repeated on the basic block level. The final result of this is a list of basic blocks that are present in all executables in our cluster in the same order. For the byte sequences obtained from these basic blocks, we switch to a fast approximate k-LCS algorithm. Any gaps are filled with “*”-wildcards.
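As a rough illustration of the byte-level step, the sketch below folds the byte sequences of one matched basic block together pairwise and puts a `*` wherever something had to be skipped. It uses Python’s `difflib.SequenceMatcher` as a stand-in for the approximate k-LCS (it is a greedy block matcher, not the algorithm we actually use), and the helper names are invented for this example.

```python
from difflib import SequenceMatcher
from functools import reduce

GAP = None  # marks "any number of bytes" inside a partial signature

def merge(sig, data):
    """Keep only the parts of sig that also occur, in order, in data."""
    sm = SequenceMatcher(None, sig, list(data), autojunk=False)
    out, end_a, end_b = [], None, None
    for a, b, size in sm.get_matching_blocks():
        if size == 0:  # terminating dummy block
            continue
        # Something was skipped on either side: record a gap.
        if out and (a != end_a or b != end_b):
            out.append(GAP)
        out.extend(sig[a:a + size])
        end_a, end_b = a + size, b + size
    return out

def to_hex_signature(sig):
    """Render byte runs as hex, separated by '*' wildcards."""
    runs, run = [], []
    for token in sig + [GAP]:
        if token is GAP:
            if run:
                runs.append(bytes(run).hex())
            run = []
        else:
            run.append(token)
    return "*".join(runs)

def signature_for(blocks):
    """blocks: the bytes of the same basic block in each executable."""
    return to_hex_signature(reduce(merge, blocks[1:], list(blocks[0])))

if __name__ == "__main__":
    blocks = [bytes.fromhex("558bec83ec10e8aabbccdd5dc3"),
              bytes.fromhex("558bec83ec10e8112233445dc3")]
    print(signature_for(blocks))
```

In this toy input the two blocks differ only in the four bytes after `e8` (think of a relocated call target), and those four bytes are exactly what ends up behind the wildcard.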

The result is quite cool: VxClass can automatically cluster new malicious software into clusters of similarity – and subsequently generate a traditional AV signature from these clusters. This AV signature will, by construction, match on all members of the cluster. Furthermore it will have some predictive effect: The variable parts of the malware get whittled away as you add more executables to generate the signature from.

We have, of course, glossed over a number of subtleties here: It is possible that the signature obtained in this manner is empty. One also needs to be careful when dealing with statically linked libraries (otherwise the signature will have a large number of false positives).

So how well does this work in practice?

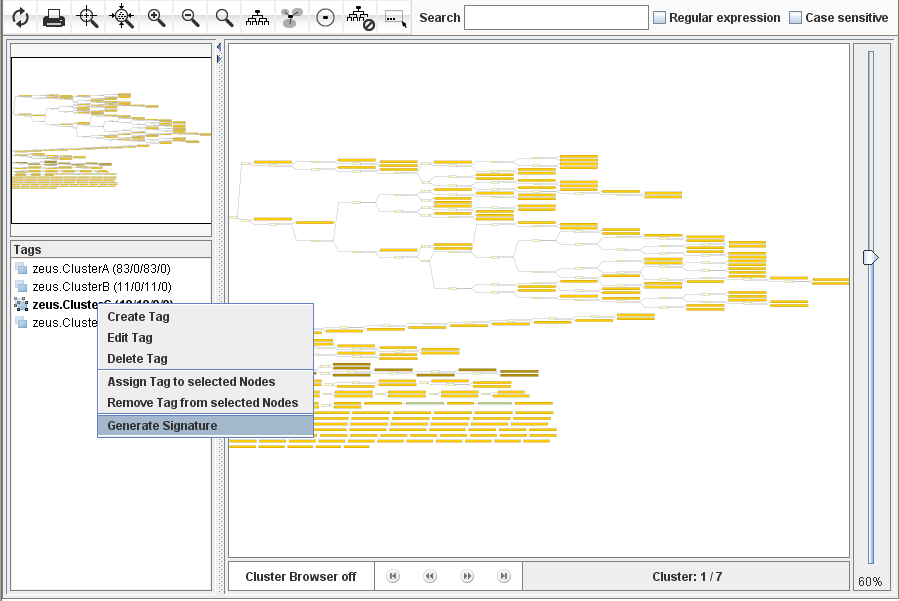

We will go over a small case study now: We throw a few thousand files into our VxClass test system and run it for a bit. We then take the resulting clusters and automatically generate signatures from them. Some of the signatures can be seen here — they are in ClamAV format, and of course they work on the unpacked binaries — but any good AV engine has a halfway decent unpacker anyhow.

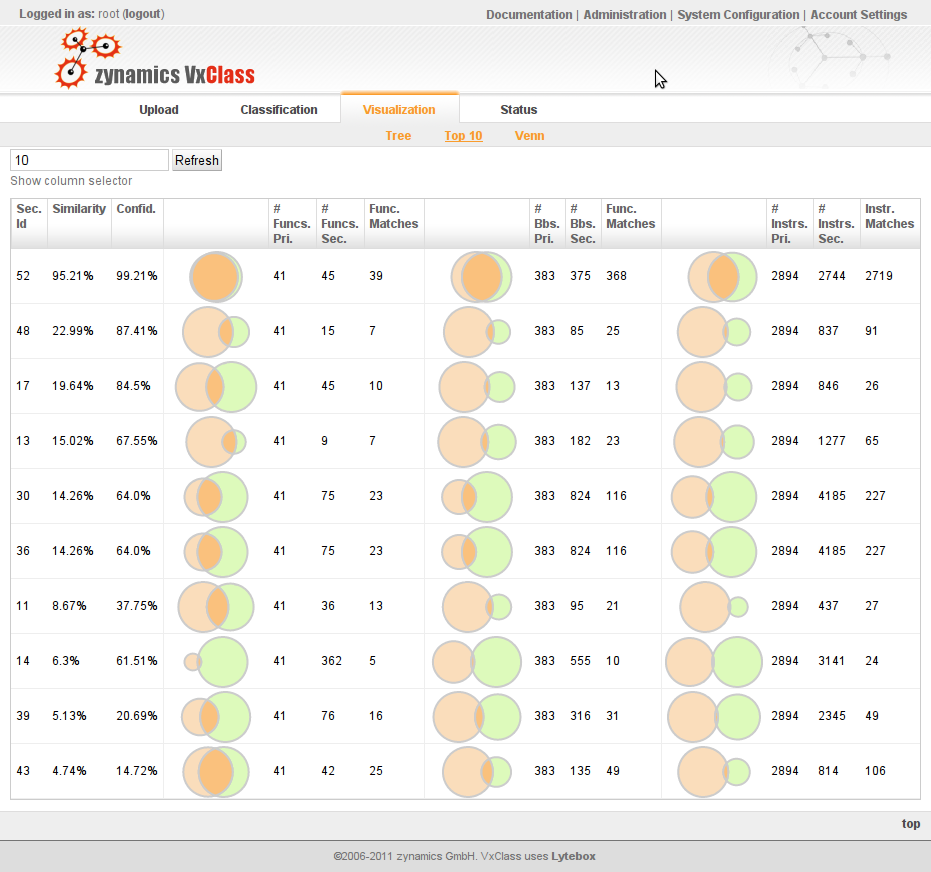

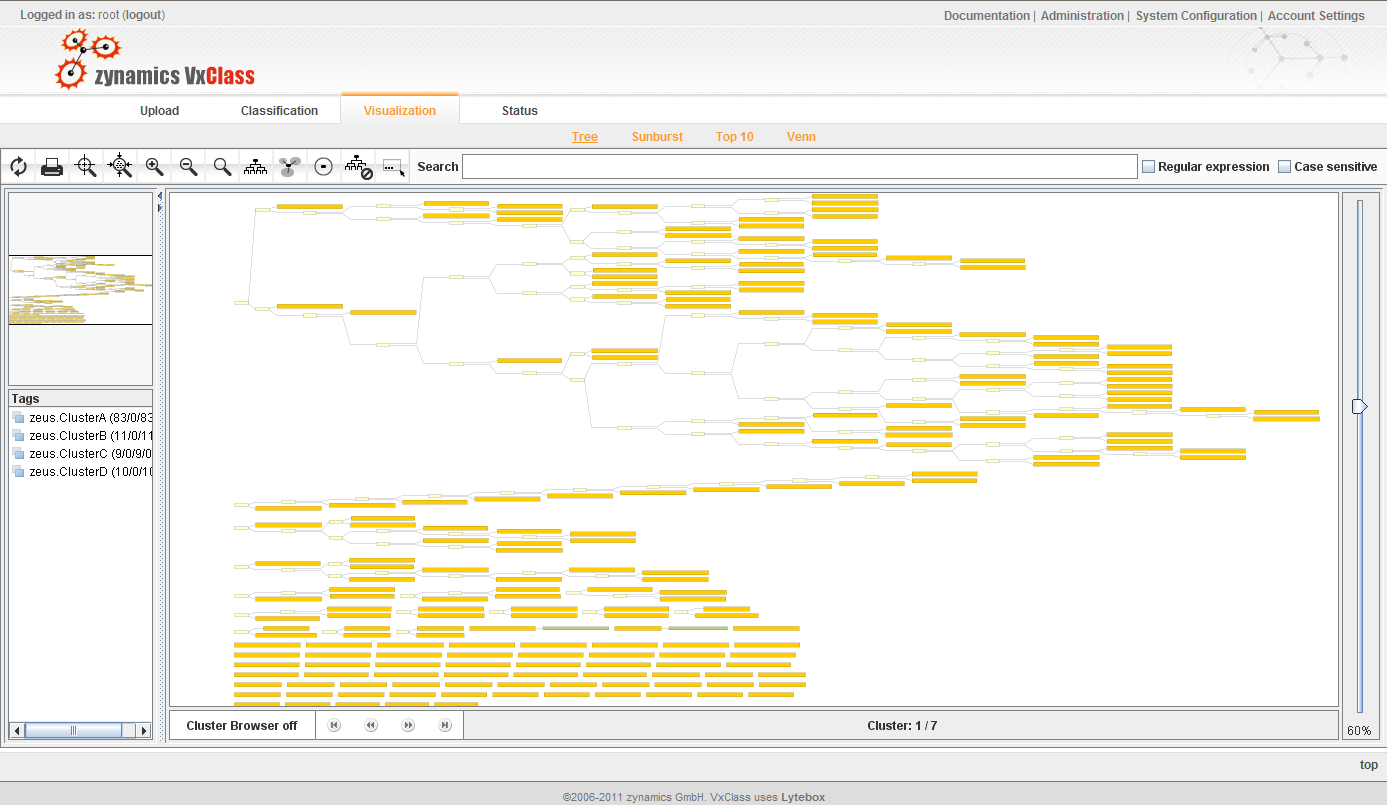

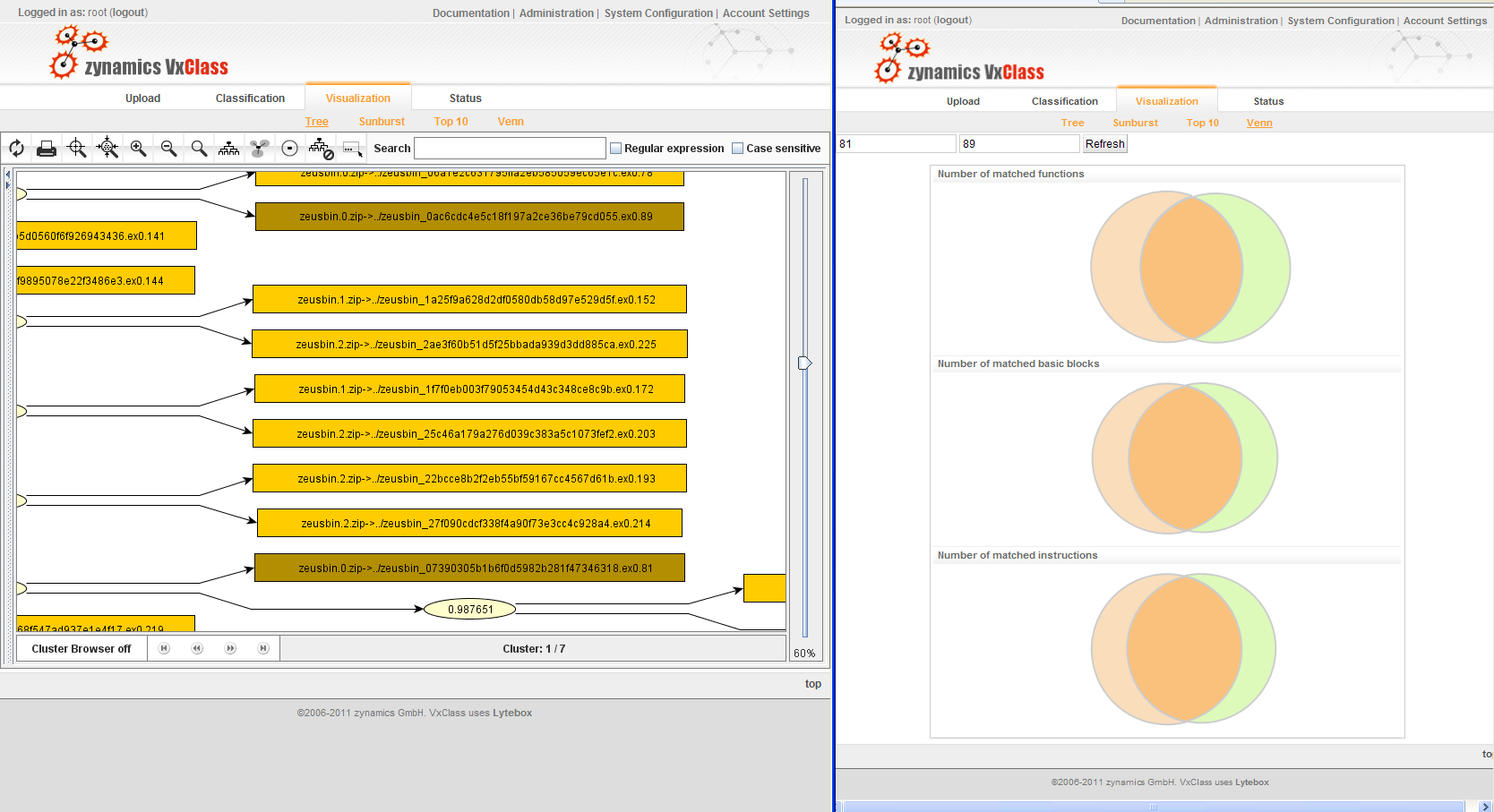

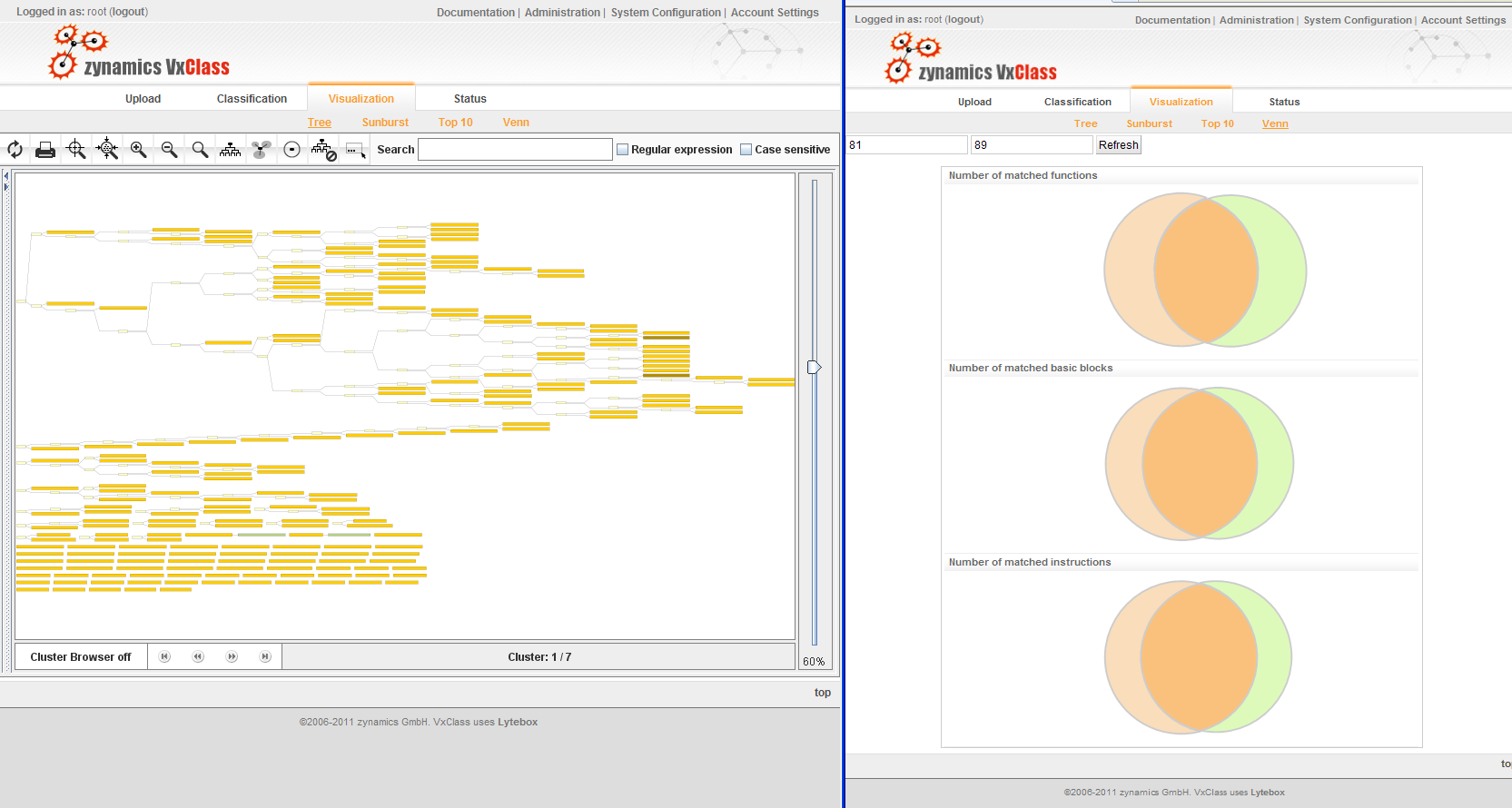

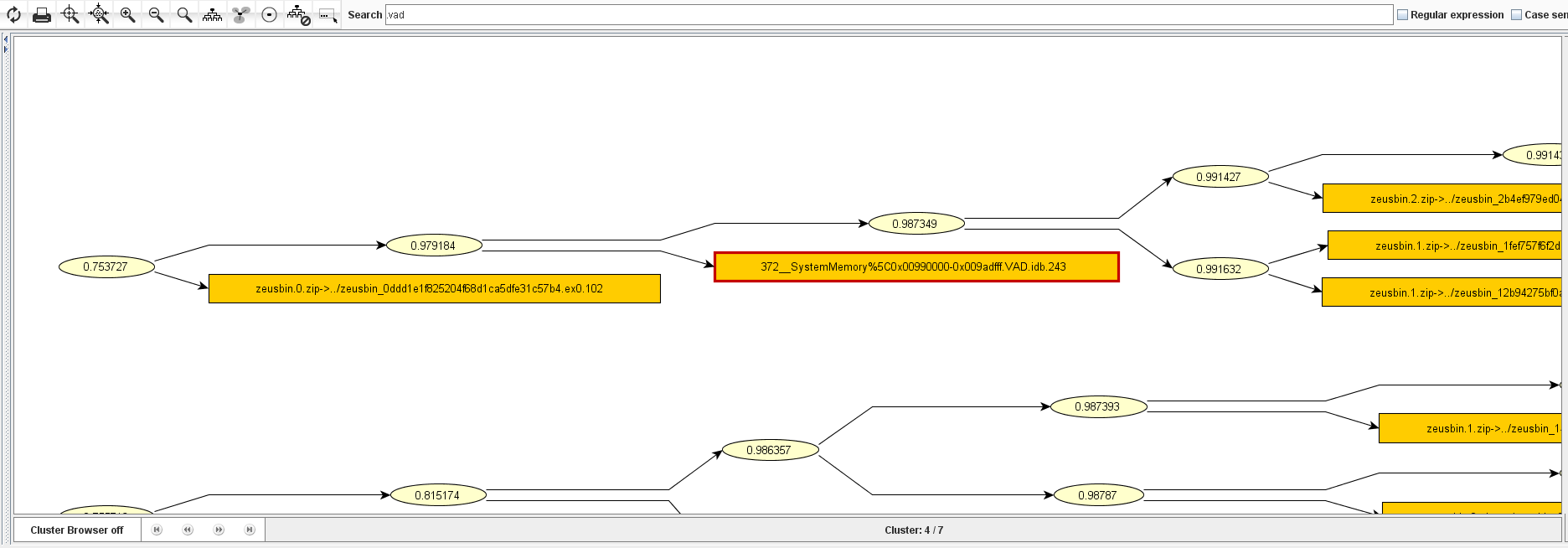

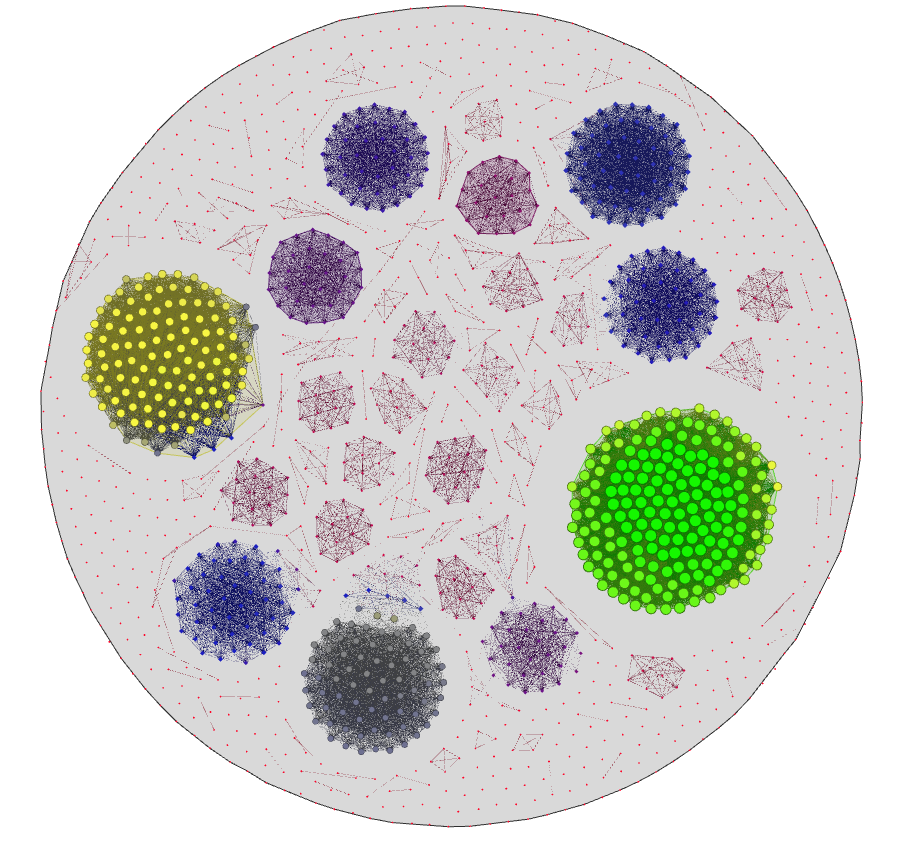

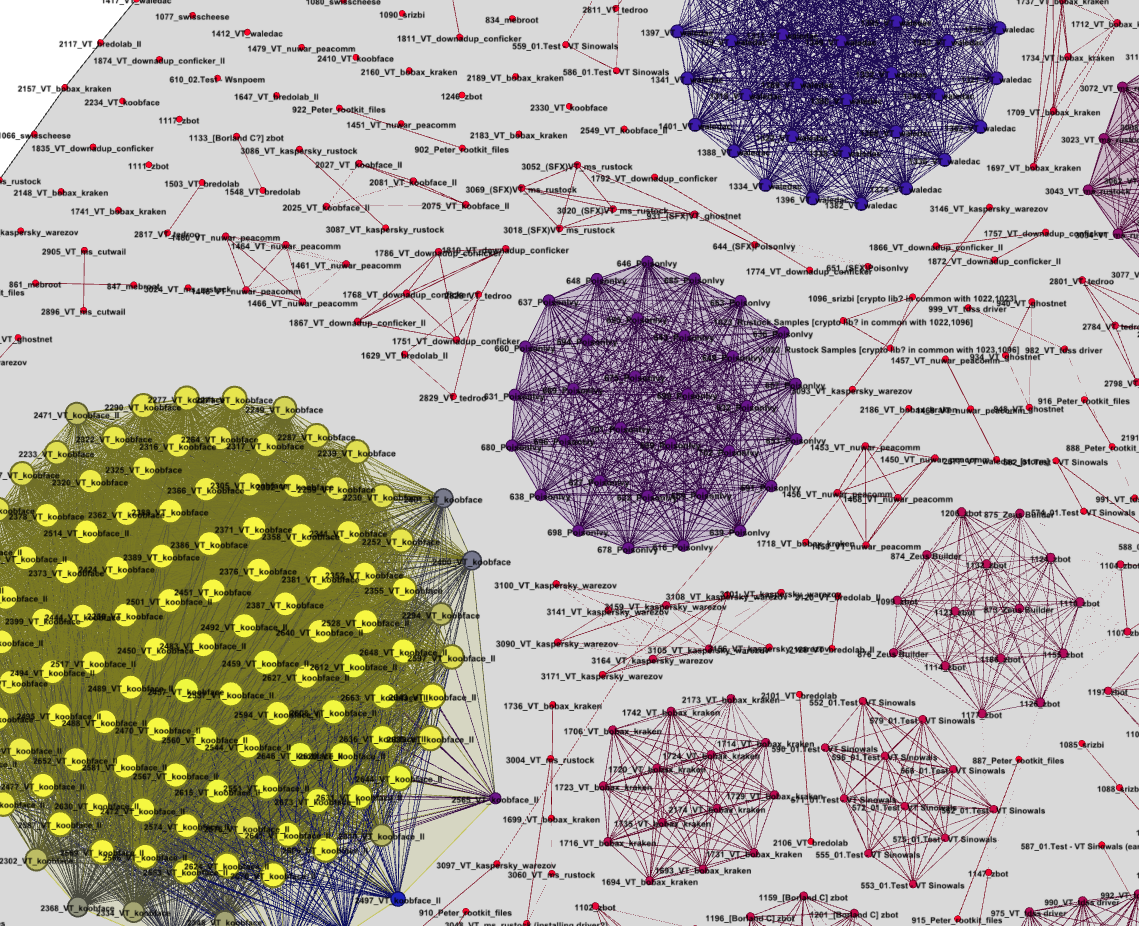

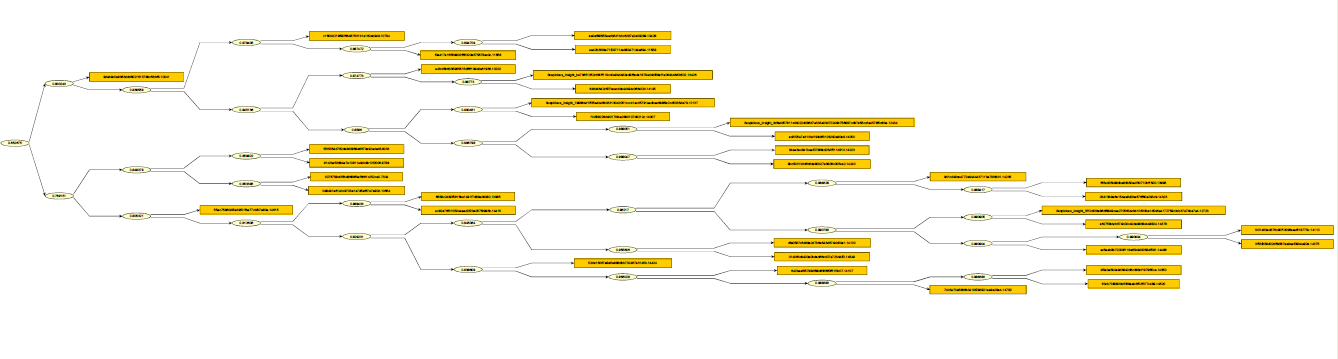

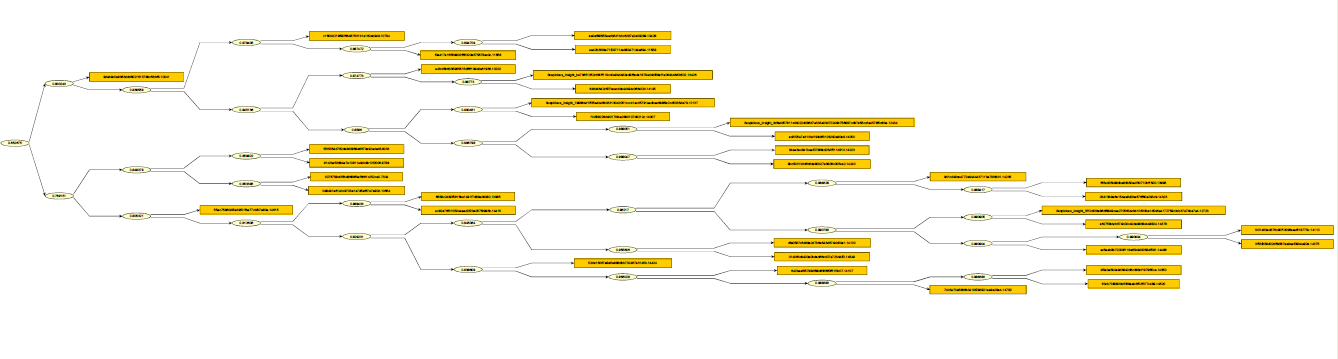

I will go through the process step by step for one particular cluster. The cluster itself can be viewed here. A low-resolution shot of it would be the following:

The cluster we're going to generate a signature for

So how do the detection rates for this cluster look in traditional AVs? Well, I threw the files into VirusTotal and created the following graphic indicating the detection rates for these files: Each row in this matrix represents an executable, and each column represents a different antivirus product (I have omitted the names). A yellow field at row 5 and column 10 means “the fifth executable in the cluster was detected by the tenth AV”, a white field at row 2 and column 1 means “the second executable in the cluster was not detected by the first AV”.

Detection matrix for 32 samples from the cluster (rows are executables, columns are AV engines)

What we can see here is the following:

- No antivirus product detects all elements of this cluster

- Detection rates vary widely for this cluster: Some AVs detect 25 out of 32 files (78%), some … 0/32 (0%)
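Reading these numbers off such a matrix is simple arithmetic. The Python sketch below shows the calculation on a tiny made-up matrix; the values are purely illustrative and are not the VirusTotal results from above.

```python
def detection_rates(matrix):
    """matrix[r][c] is True if executable r was detected by AV engine c.
    Returns the fraction of executables detected, per engine."""
    if not matrix:
        return []
    n_samples = len(matrix)
    n_engines = len(matrix[0])
    return [sum(row[c] for row in matrix) / n_samples for c in range(n_engines)]

if __name__ == "__main__":
    # Hypothetical 4 executables x 3 engines.
    toy = [
        [True,  False, False],
        [True,  True,  False],
        [False, True,  False],
        [True,  False, False],
    ]
    rates = detection_rates(toy)
    print(rates)
    print("some engine detects everything:", any(r == 1.0 for r in rates))
    # The best engine in the real matrix: 25 of 32 files.
    print(f"{25 / 32:.0%}")
```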

If we inspect the names along with the detection results (which you can do in this table), we can also see which different names are assigned by the different AVs to this malware.

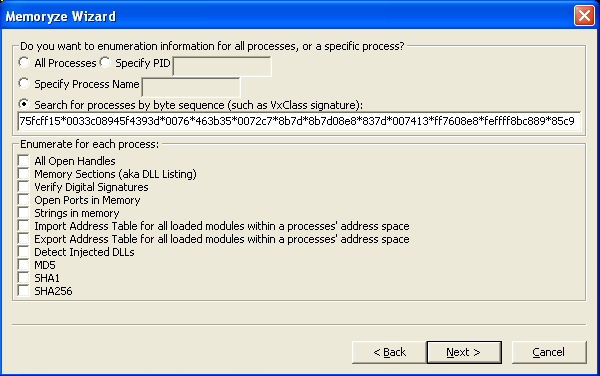

So, let’s run our signature generator on this cluster of executables.

time /opt/vxclass/bin/vxsig "7304 vs 9789.BinDiff" "9789 vs 10041.BinDiff" "10041 vs 10202.BinDiff" "10202 vs 10428.BinDiff" "10428 vs 10654.BinDiff" "10654 vs 10794.BinDiff" "10794 vs 11558.BinDiff" "11558 vs 11658.BinDiff" "11658 vs 12137.BinDiff" "12137 vs 12434.BinDiff" "12434 vs 12723.BinDiff" "12723 vs 13426.BinDiff" "13426 vs 13985.BinDiff" "13985 vs 13995.BinDiff" "13995 vs 14007.BinDiff" "14007 vs 14023.BinDiff" "14023 vs 14050.BinDiff" "14050 vs 14100.BinDiff" "14100 vs 14107.BinDiff" "14107 vs 14110.BinDiff" "14110 vs 14145.BinDiff" "14145 vs 14235.BinDiff" "14235 vs 14240.BinDiff" "14240 vs 14323.BinDiff" "14323 vs 14350.BinDiff" "14350 vs 14375.BinDiff" "14375 vs 14378.BinDiff" "14378 vs 14415.BinDiff" "14415 vs 14424.BinDiff" "14424 vs 14486.BinDiff" "14486 vs 14520.BinDiff" "14520 vs 14549.BinDiff" "14549 vs 14615.BinDiff" "14615 vs 14700.BinDiff"

The entire thing takes roughly 40 seconds to run. The resulting signature can be viewed here.

So, to summarize:

- Using VxClass, we can quickly sort new malicious executables into clusters based on the amount of code they share

- Using the results from BinDiff and some clever algorithmic trickery, we can generate “traditional” byte signatures automatically

- These signatures are guaranteed to match on all executables that were used in the construction of the signature

- The signatures have some predictive power, too: In a drastic example we generated a signature from 15 Swizzor variants that then went on to detect 929 new versions of the malware

- These are post-unpacking signatures, i.e. your scanning engine needs to do a halfway decent job at unpacking in order for these signatures to work

If you happen to work for an AV company and think this technology might be useful for you, please contact info@zynamics.com 🙂